Author : Vivek Vardhan Varanasi

Talend interview questions are generally grouped under the following topics:

- Talend ETL

- Talend Data Integration

- Talend administration

- Talend ESB

- Talend Big Data

- Talend Cloud

Talend Data Integration

Explain the various connections available in Talend.

Connections define whether data is output, processed, or whether components run in a logical sequence. The connection types are:

- Row: The Row connection deals with the actual data flow. Following are the types of Row connections supported by Talend:

  - Main

  - Lookup

  - Filter

  - Rejects

  - ErrorRejects

  - Output

  - Uniques/Duplicates

  - Multiple Input/Output

- Iterate: The Iterate connection is used to perform a loop on files contained in a directory, on rows contained in a file or on the database entries.

- Trigger: The Trigger connection creates a dependency between Jobs or subjobs, which are triggered one after the other according to the trigger’s nature. Trigger connections fall into two categories:

  - Subjob Triggers

    - OnSubjobOK

    - OnSubjobError

    - Run if

  - Component Triggers

    - OnComponentOK

    - OnComponentError

    - Run if

- Link: The Link connection is used to transfer the table schema information to the ELT mapper component.

What schemas are supported by Talend?

The following schemas are supported:

- Generic schema: Not tied to any particular source, so it can be used as a shareable resource across different data sources.

- Fixed schema: Read-only schemas that come predefined with some components.

- Repository schema: A reusable schema. Any change made to it is reflected in all the Jobs that use it.

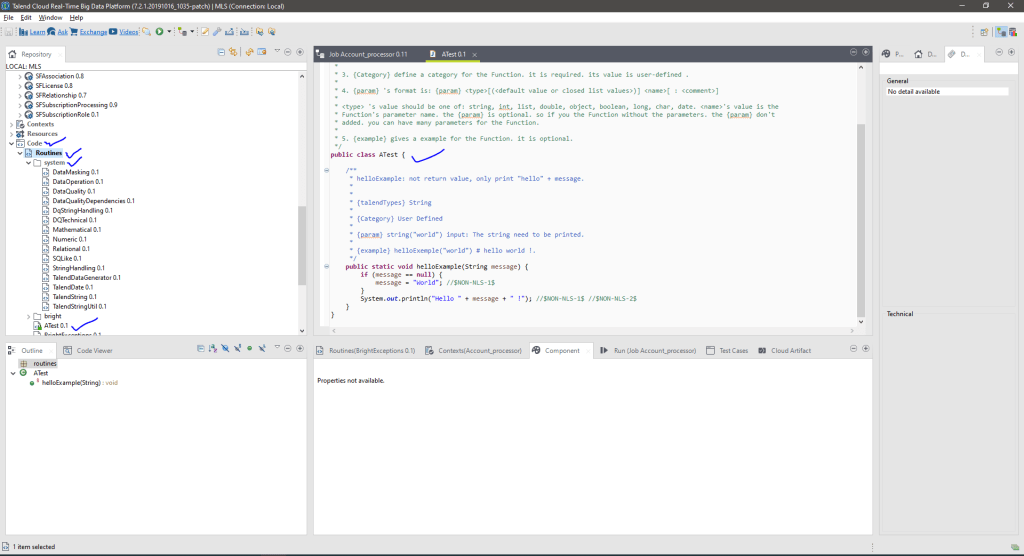

What are routines?

Routines are reusable pieces of Java code that can be used to optimize data processing with custom code. They also extend Talend Studio's features and improve Job capacity. There are two kinds of routines: user routines and system routines.

User routine: A routine created by the user, either written from scratch or adapted from an existing one.

System routine: A read-only routine supplied with Talend Studio.
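As a rough illustration of a user routine, the sketch below shows a small class with one static helper. The class name `StringRoutines` and the method `maskEmail` are hypothetical, chosen only for this example. In Talend Studio a routine class would normally be declared `public` inside the `routines` package; both are left out here so the snippet compiles on its own.

```java
// Sketch of a user routine: a plain Java class exposing static helpers.
// StringRoutines and maskEmail are made-up names for illustration.
class StringRoutines {

    /**
     * Masks the local part of an email address, keeping its first character,
     * e.g. "vivek@example.com" becomes "v****@example.com".
     * Returns the input unchanged when it is null or has nothing before "@".
     */
    public static String maskEmail(String email) {
        if (email == null) {
            return null;
        }
        int at = email.indexOf('@');
        if (at <= 0) {
            return email;
        }
        StringBuilder masked = new StringBuilder();
        masked.append(email.charAt(0));
        for (int i = 1; i < at; i++) {
            masked.append('*');
        }
        masked.append(email.substring(at));
        return masked.toString();
    }
}
```

Inside a Job, such a routine would typically be called from an expression, for example `StringRoutines.maskEmail(row1.email)`, where `row1.email` is an assumed column name.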

What is a subjob? How is data sent from the parent Job to the child Job?

A subjob is a single component, or several components joined by a data flow. A Job has at least one subjob. To pass a value from the parent Job to the child Job, use context variables.

Explain the tMap component and list the functions it can perform.

This is one of the most frequently asked Talend interview questions. tMap is one of the essential components and a core part of the Processing family. Its main use is to map input data to output data. The main functions that tMap can perform include:

- Applying transformation rules to any kind of field

- Adding or removing columns

- Rejecting data

- Filtering input and output data using constraints

- Concatenating and interchanging data

- Multiplexing and demultiplexing data
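The functions listed above can be pictured in plain Java. The sketch below is not code generated by Talend; it only imitates what a tMap with one input and two outputs might do. The row classes, column names, and the "age of 18 or more" constraint are all assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;

// Plain-Java imitation of a tMap: one input flow, a main output and a reject output.
class TMapSketch {

    // Hypothetical input schema (what a Job would expose as row1).
    static class InputRow {
        final int id;
        final String firstName;
        final String lastName;
        final Integer age;

        InputRow(int id, String firstName, String lastName, Integer age) {
            this.id = id;
            this.firstName = firstName;
            this.lastName = lastName;
            this.age = age;
        }
    }

    // Output schema: the age column is removed and a fullName column is added.
    static class OutputRow {
        final int id;
        final String fullName;

        OutputRow(int id, String fullName) {
            this.id = id;
            this.fullName = fullName;
        }
    }

    final List<OutputRow> adults = new ArrayList<>();  // main output
    final List<InputRow> rejects = new ArrayList<>();  // reject output

    void map(List<InputRow> input) {
        for (InputRow row1 : input) {
            // Filter constraint on the output.
            boolean accepted = row1.age != null && row1.age >= 18;
            if (accepted) {
                // Transformation rules plus concatenation of two columns.
                String fullName = row1.firstName.trim() + " " + row1.lastName.trim().toUpperCase();
                adults.add(new OutputRow(row1.id, fullName));
            } else {
                // Rows that fail the constraint go to the reject output.
                rejects.add(row1);
            }
        }
    }
}
```

Splitting one input into the `adults` and `rejects` lists is a simple case of demultiplexing; in a real tMap the same expressions would be typed into the expression and filter fields of the map editor.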

Explain tDenormalizeSortedRow. Also, can Binary or ASCII transfer mode be used when creating an SFTP connection?

tDenormalizeSortedRow belongs to the Processing family. It synthesizes a sorted input flow in a way that saves memory: all sorted input rows are combined into groups, and the distinct values of each group are joined with an item separator.

No, transfer modes cannot be used when creating an SFTP connection. SFTP is an extension of SSH and therefore does not support transfer modes.
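To make the denormalization of a sorted flow concrete, here is a minimal sketch in plain Java. It is an illustration of the idea rather than the component's actual implementation: it assumes two-column rows (key, value), non-null keys, input already sorted by key, and that duplicate values within a group are merged.

```java
import java.util.ArrayList;
import java.util.List;

class DenormalizeSortedSketch {

    /**
     * sortedRows: {key, value} pairs already sorted by key.
     * Returns one {key, joinedValues} pair per key, where the distinct
     * values of the group are joined with the given separator.
     */
    static List<String[]> denormalize(List<String[]> sortedRows, String separator) {
        List<String[]> out = new ArrayList<>();
        String currentKey = null;
        List<String> values = new ArrayList<>();

        for (String[] row : sortedRows) {
            String key = row[0];
            // A key change closes the current group. Because the input is
            // sorted, only one group has to be held in memory at a time.
            if (currentKey != null && !currentKey.equals(key)) {
                out.add(new String[] {currentKey, String.join(separator, values)});
                values.clear();
            }
            currentKey = key;
            if (!values.contains(row[1])) {  // keep distinct values only
                values.add(row[1]);
            }
        }
        if (currentKey != null) {
            out.add(new String[] {currentKey, String.join(separator, values)});
        }
        return out;
    }
}
```

For instance, the sorted rows (A, x), (A, y), (A, x), (B, z) with separator "," would collapse to (A, "x,y") and (B, "z").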

Error handling in Talend

Errors can be handled in the following ways:

- You can rely on Talend's exception-throwing behavior: an unhandled exception appears as a red stack trace in the Run view.

- Every component and subjob returns a code that can lead to additional processing. The OK/Error links can be used to redirect execution to an error-handling routine.

- The best and most trusted way to handle an error is to define an error-handling subjob that is called when an error occurs.

It's good practice to handle errors at both the subjob and the component level.

To print the error details of a component, click the component and check the Outline view to see the available error variables.

For example:

((String)globalMap.get("tRowGenerator_1_ERROR_MESSAGE"))
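The lookup above can be wrapped in a small helper. The sketch below is a standalone approximation: it treats `globalMap` as an ordinary `Map<String, Object>` and assumes the `<component>_ERROR_MESSAGE` key pattern seen in the example. The class and method names are hypothetical.

```java
import java.util.Map;

class ErrorMessageSketch {

    /**
     * Builds a log line from the error message a component left in globalMap.
     * componentName is the unique component name, e.g. "tRowGenerator_1".
     */
    static String describeError(Map<String, Object> globalMap, String componentName) {
        String message = (String) globalMap.get(componentName + "_ERROR_MESSAGE");
        if (message == null) {
            // No entry means the component did not record an error.
            return componentName + ": no error recorded";
        }
        return componentName + " failed: " + message;
    }
}
```

In a Job, logic like this would usually sit in a tJava component reached through an OnSubjobError or OnComponentError trigger, so that it only runs after a failure.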

Differentiate between ‘OnComponentOk’ and ‘OnSubjobOk’.

| OnComponentOk | OnSubjobOk |
| --- | --- |
| 1. Belongs to Component Triggers | 1. Belongs to Subjob Triggers |
| 2. The linked Subjob starts executing only when the previous component successfully finishes its execution | 2. The linked Subjob starts executing only when the previous Subjob completely finishes its execution |
| 3. This link can be used with any component in a Job | 3. This link can only be used with the first component of the Subjob |

Differentiate between ‘Built-in’ and ‘Repository’.

| Built-in | Repository |
| --- | --- |
| 1. Stored locally inside a Job | 1. Stored centrally inside the Repository |
| 2. Can be used by the local Job only | 2. Can be used globally by any Job within a project |
| 3. Can be updated easily within a Job | 3. Data is read-only within a Job |

What are context variables and why are they used in Talend?

Context variables are user-defined parameters that are passed into a Job at runtime. Their values may change as the Job is promoted from development to test and production environments. Context variables can be defined in three ways:

- Embedded Context Variables

- Repository Context Variables

- External Context Variables
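One way to picture how these sources interact is a set of embedded defaults that an external key=value source overrides at runtime. The sketch below uses `java.util.Properties` only to illustrate that idea; it is not how Talend loads contexts internally, and the variable names `db_host` and `db_port` are made up.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

class ContextSketch {

    /**
     * Starts from the defaults embedded in the Job, then lets an external
     * key=value source (for example the text of a context file) override them.
     */
    static Properties resolve(Properties embeddedDefaults, String externalKeyValues) {
        Properties context = new Properties();
        context.putAll(embeddedDefaults);
        try {
            // Keys present in the external source replace the defaults.
            context.load(new StringReader(externalKeyValues));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return context;
    }
}
```

With a default of `db_host=localhost` and an external line `db_host=prod-db.example.com`, the resolved context would point at the production host while any variable missing from the external source keeps its default.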

Differentiate between ETL and ELT.

| ETL | ELT |
| --- | --- |
| 1. Data is first Extracted, then Transformed, and then Loaded into a target system | 1. Data is first Extracted, then Loaded into the target system, where it is then Transformed |
| 2. As data size grows, processing slows down because the entire ETL process must wait until Transformation is over | 2. Processing is not dependent on the size of the data |
| 3. Easy to implement | 3. Needs deep knowledge of tools in order to implement |
| 4. Doesn’t provide Data Lake support | 4. Provides Data Lake support |
| 5. Supports relational data | 5. Supports unstructured data |

Differentiate between the usage of tJava, tJavaRow, and tJavaFlex components.

| Functions | tJava | tJavaRow | tJavaFlex |
| --- | --- | --- | --- |
| 1. Can be used to integrate custom Java code | Yes | Yes | Yes |
| 2. Will be executed only once at the beginning of the Subjob | Yes | No | No |
| 3. Needs input flow | No | Yes | No |
| 4. Needs output flow | No | Only if output schema is defined | Only if output schema is defined |
| 5. Can be used as the first component of a Job | Yes | No | Yes |
| 6. Can be used as a different Subjob | Yes | No | Yes |
| 7. Allows Main Flow or Iterator Flow | Both | Only Main | Both |
| 8. Has three parts of Java code | No | No | Yes |
| 9. Can auto propagate data | No | No | Yes |
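The "three parts of Java code" in tJavaFlex are commonly described as start, main, and end code. The sketch below shows, in plain Java, how such parts could wrap around a row loop; it is an approximation for illustration, not the code Talend generates, and the class and method names are hypothetical.

```java
class JavaFlexSketch {

    static String run(String[] lines) {
        // Start code: runs once, before the first row.
        int count = 0;
        StringBuilder out = new StringBuilder();

        for (String line : lines) {
            // Main code: runs once for every row.
            count++;
            out.append(count).append(':').append(line.toUpperCase()).append('\n');
        }

        // End code: runs once, after the last row.
        out.append("rows=").append(count);
        return out.toString();
    }
}
```

By contrast, a tJava would correspond to a single block executed once, and a tJavaRow to only the per-row body inside the loop.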

Scenario based interview questions

- How do you design a Talend Job end to end, with test cases, for the following functionality? I need a list of people who are eligible for the company bonus in 2019, where the employee must have joined before April 2019. Resources available for the above problem:

  - One file with the list of all employees (id, first name, last name, address)

  - One file (id, date of joining)

- How do you design a Talend Job that brings data from different sources, applies your own math, and produces cleansed final data?

- How do you look up an incoming set of 1 million records against 20 million records in a database?

- How do you design a Talend Job to be fail-proof? What should your design look like to handle change data capture (CDC), a restart mechanism, and failure conditions? How does your Job know where to resume from?

- If you are working on a REST API with pagination, how do you design your Talend Job to handle:

  - Pagination

  - Failed calls

  - Very slow response times

  - Filtering

  - Data quality

What are some good practices you implemented in your previous project, and how were they helpful?

1. Remove unnecessary fields/columns as early as possible using the tFilterColumns component.

2. Remove unnecessary data/records as early as possible using the tFilterRow component.

3. Use Select Query to retrieve data from database

4. Use Database Bulk components

5. Store on Disk Option

6. Allocating more memory to the Jobs

7. Parallelism

8. Use Talend ELT Components when required

9. Use SAX parser over Dom4J whenever required

10. Index Database Table columns

11. Split Talend Job to smaller Subjobs

Hi Vivek can you please post answers for above questions


Hi Rajesh,

This looks like a requirement from your company, but here is my solution.

In Talend, you need to build a mechanism that can restart based on a timestamp, such as a last-updated or last-modified timestamp.

For this problem, the best way to implement it is to use SQL queries together with Talend data ingestion from files.

Step 1: Use a Talend file import to load the data into a table. Let's say the first table is employees.

Step 2: Use another Talend file import to load the id and date of joining. When you import, make sure the date and time are inserted as one more column.

Step 3: Use your SQL skills to join the tables and do a lookup. This gives you a query that filters on date of joining, for example date of joining < 2019-04-01 00:00:00 for the "before April 2019" rule.

Step 4: Call the above SQL via Talend.

This way, you are using Talend to ingest the files and SQL for the joins and other operations, so performance will be good enough.
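The join-and-filter logic can also be sketched in plain Java, which doubles as a place to pin down test cases. This is only an in-memory illustration of the rule from the question (joined before April 2019), not the SQL or Talend Job itself; the class name, the maps, and the cutoff constant are assumptions.

```java
import java.time.LocalDate;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

class BonusEligibilitySketch {

    // Assumed cutoff: "before April 2019" means strictly before 2019-04-01.
    static final LocalDate CUTOFF = LocalDate.of(2019, 4, 1);

    /**
     * Joins the employee file (id -> name) with the joining file
     * (id -> date of joining) and keeps employees who joined before the cutoff.
     * Employees without a joining date are left out.
     */
    static List<String> eligible(Map<Integer, String> namesById,
                                 Map<Integer, LocalDate> joinDateById) {
        List<String> result = new ArrayList<>();
        for (Map.Entry<Integer, String> employee : namesById.entrySet()) {
            LocalDate joined = joinDateById.get(employee.getKey());
            if (joined != null && joined.isBefore(CUTOFF)) {
                result.add(employee.getValue());
            }
        }
        return result;
    }
}
```

Useful test cases here include a joining date of 2019-03-31 (eligible), exactly 2019-04-01 (not eligible), and an employee id that is missing from the joining file.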

Talend is used to kick off those queries and is useful for scheduling, running, and updating statistics. To make the Job fail-proof, design around OnComponentError or OnSubjobError triggers. This takes care of the error handling.

For CDC, you can use the timestamp: compare the last-modified or last-updated time against the current timestamp, and get only the records that are newer than the last run.
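A timestamp-based approach like this can be sketched as follows. It is a simplified illustration under the assumption that every record carries a reliable last-modified timestamp and that the time of the last successful run is stored somewhere between runs; the class, field, and method names are hypothetical.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;

class CdcSketch {

    static class Row {
        final int id;
        final Instant lastModified;

        Row(int id, Instant lastModified) {
            this.id = id;
            this.lastModified = lastModified;
        }
    }

    /** Returns only the rows modified after the previous successful run. */
    static List<Row> changedSince(List<Row> source, Instant lastRun) {
        List<Row> changed = new ArrayList<>();
        for (Row row : source) {
            if (row.lastModified.isAfter(lastRun)) {
                changed.add(row);
            }
        }
        return changed;
    }

    /**
     * Computes the value to store for the next run: the latest timestamp
     * among the processed rows, or the old value if nothing was processed.
     */
    static Instant nextWatermark(List<Row> processed, Instant lastRun) {
        Instant max = lastRun;
        for (Row row : processed) {
            if (row.lastModified.isAfter(max)) {
                max = row.lastModified;
            }
        }
        return max;
    }
}
```

Storing the new watermark only after the load succeeds is what lets a restarted Job resume from the last point it completed.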

